This is a multi-part series about automating VMware vSphere template builds. A template is a pre-installed virtual machine that acts as a master image from which new virtual machines are cloned. In this series I describe how to get started with automating the process. As I am writing these blog posts while working on the implementation, some aspects may seem “rough around the edges”.

- Part 1: Overview/Motivation

- Part 2: Building a pipeline for an MVP

- Part 3: An overview of Packer

- Part 4: Concourse elements and extending the pipeline

If you want to know more about the topic, there is an awesome blog series about automated template management by Eric Lee (many thanks to Timo Sugliani for the recommendation).

Templates!

vSphere templates have been around forever; we all use them, and they are awesome, right?

Everyone can use the same baseline for all virtual machines. There is no need to juggle drivers, installation ISOs, missing product keys, updates and so on; you can just start consuming.

To put it another way, templates are the foundation that most of your business-critical apps run on.

The problem with template management

While templates are great there are some issues that surface over time.

Clutter:

Once upon a time you created a template and life was good. Then came an update to the operating system/driver/…, so you cloned your template, updated the components and released the new version. Well, all the old stuff is still kept inside the template, and you keep piling more stuff on top with every update cycle.

Auditing/Change trail:

Okay, you are not affected by clutter because you build a new template for every update. But have you automated it yet, or how do you ensure that the template has the same settings every time you create it?

Or is there more than one person handling the template? So what did Jeff change while you were on vacation? Do you need a change request for modifying your templates, or will every modification go unnoticed in your subsequently cloned VMs until something blows up?

Sprawl:

It starts with an Ubuntu 16.04 LTS template. Then someone needs a newer release, and an 18.04 LTS template is provided. Here, not only 18.04.3 but also 18.04.2 was needed because something wasn’t working on the newer point release. Yet another team wanted to use 16.04 LTS for Kubernetes worker/master VMs but needed a different setup (e.g. without swap). And here we are, without even considering the Windows side with full, core and nano installations, service packs, …

Before you know it there are 20 templates based on different Windows and Linux flavors, specialized installations and update releases.

The list is far from complete, but I think the punchline is clear: over time, you will lose the battle against manual maintenance.

Overcoming the challenges

I am trying to address some of the issues (and more) by moving my template creation process into a build pipeline.

What does that involve? To quote Wikipedia on continuous delivery:

Code is compiled if necessary and then packaged by a build server every time a change is committed to a source control repository, then tested by a number of different techniques (possibly including manual testing) before it can be marked as releasable.
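The core of that definition (build on every change, run a series of checks, and only then mark the result as releasable) fits in a few lines. The following Python sketch is purely illustrative: `run_pipeline` and the stage names are hypothetical, and in this series Concourse and Packer do the real work.

```python
from typing import Callable, List, Tuple

# A stage is a (name, callable) pair; the callable returns True on success.
Stage = Tuple[str, Callable[[], bool]]


def run_pipeline(build: Stage, tests: List[Stage]) -> Tuple[bool, List[str]]:
    """Run the build, then every test; only a fully green run is releasable.

    Returns (releasable, log), where log lists each stage with its result.
    """
    log: List[str] = []
    name, step = build
    if not step():
        log.append(f"{name}: failed")
        return False, log  # nothing to test if the build itself broke
    log.append(f"{name}: ok")

    releasable = True
    for test_name, test in tests:
        passed = test()
        log.append(f"{test_name}: {'ok' if passed else 'failed'}")
        releasable = releasable and passed
    return releasable, log


if __name__ == "__main__":
    ok, log = run_pipeline(
        ("packer build", lambda: True),
        [("boots", lambda: True), ("no swap", lambda: False)],
    )
    print(ok, log)
```

Running every test even after one has failed is a deliberate choice in this sketch: a complete report of what broke is more useful than stopping at the first failure.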

In more practical terms here is what I will try to cover:

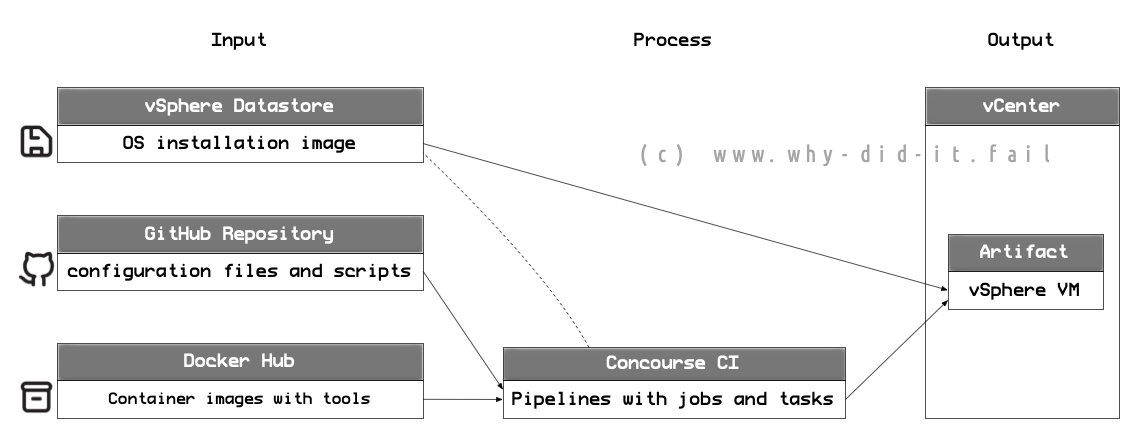

- In the first step, each template will be defined as a set of configuration files (code) for Packer.

Packer from HashiCorp is an awesome tool for creating machine images (templates) in an automated fashion. The configuration files will be stored on GitHub along with all scripts required for further steps in the pipeline.

- The pipeline will pull the configuration files from Git and pass them as input to containers that execute all required tasks. The container images in turn will be pulled from Docker Hub.

- For now the pipeline will be triggered manually, but this can later be changed to a different trigger, e.g. whenever code is merged into the master branch.
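To give an idea of what "templates as code" looks like, here is a minimal Python sketch that emits a Packer JSON template. It assumes Packer's `vsphere-iso` builder is available; the VM name, ISO path and build user are hypothetical, and settings a real build needs (datacenter, cluster, datastore, network, unattended installation) are deliberately left out. Part 3 covers Packer in more detail.

```python
import json


def vsphere_iso_template(vm_name: str, iso_path: str) -> dict:
    """Build a deliberately incomplete Packer JSON template for vSphere.

    Assumption: Packer's "vsphere-iso" builder. Datacenter, cluster,
    datastore, network and unattended-install settings are omitted.
    """
    return {
        # Credentials are supplied at build time instead of being
        # committed to Git together with the template definition.
        "variables": {
            "vcenter_server": "",
            "vcenter_username": "",
            "vcenter_password": "",
        },
        "builders": [
            {
                "type": "vsphere-iso",
                "vcenter_server": "{{user `vcenter_server`}}",
                "username": "{{user `vcenter_username`}}",
                "password": "{{user `vcenter_password`}}",
                "vm_name": vm_name,
                "iso_paths": [iso_path],
                "ssh_username": "packer",  # hypothetical build user
                "convert_to_template": True,  # finished VM becomes a template
            }
        ],
    }


if __name__ == "__main__":
    template = vsphere_iso_template(
        "tpl-ubuntu-18.04",  # hypothetical template name
        "[datastore1] iso/ubuntu-18.04.3-server-amd64.iso",  # hypothetical path
    )
    print(json.dumps(template, indent=2))
```

Saving the output as, say, `ubuntu-18.04.json` (a hypothetical file name) and committing it to Git makes every change to the template reviewable. A build could then be started with something like `packer build -var 'vcenter_password=...' ubuntu-18.04.json`, which keeps the credentials out of the repository.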

The beauty of this approach is that the pipeline can be extended with everything you can think of:

Applying a versioning scheme to mark old template releases, distributing the template to multiple locations, posting status updates in Slack, running unit tests, uploading new OS images to the datastore and so on.
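As an example of such an extension, a versioning scheme can be as simple as numbering template names. This Python sketch assumes a hypothetical `<base>-v<N>` naming convention; the hypothetical `plan_release` function picks the next name and lists older releases that could be retired, keeping the newest `keep` releases as a fallback.

```python
import re
from typing import Iterable, List, Tuple


def plan_release(existing: Iterable[str], base: str, keep: int = 1) -> Tuple[str, List[str]]:
    """Return the next template name for `base` and the releases to retire.

    Hypothetical naming scheme: "<base>-v<N>", e.g. "ubuntu-18.04-v3".
    The newest `keep` existing releases are kept as fallback; older ones
    are returned so a later pipeline step could mark or remove them.
    """
    # re.escape keeps the dots in "18.04" from matching any character.
    pattern = re.compile(re.escape(base) + r"-v(\d+)")
    versions = []
    for name in existing:
        match = pattern.fullmatch(name)
        if match:
            versions.append(int(match.group(1)))
    versions.sort()  # numeric sort, so v10 comes after v9

    next_version = versions[-1] + 1 if versions else 1
    # versions[:-0] would be empty, so keep=0 is handled separately.
    to_retire = versions[:-keep] if keep > 0 else versions
    return f"{base}-v{next_version}", [f"{base}-v{v}" for v in to_retire]
```

For example, `plan_release(["ubuntu-18.04-v1", "ubuntu-18.04-v2", "ubuntu-18.04-v3"], "ubuntu-18.04")` returns `("ubuntu-18.04-v4", ["ubuntu-18.04-v1", "ubuntu-18.04-v2"])`. Specialized variants such as a hypothetical `ubuntu-18.04-k8s-v2` do not match the `ubuntu-18.04` base, so each flavor gets its own version line.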

Prerequisites

To follow along you should take care of the following tasks at this point:

- Create a Docker Hub account

- Create a GitHub account (to store your own configuration)

  - When using GitHub, I recommend using a machine user with a dedicated SSH key

  - You can also use any other Git service, e.g. an on-premises GitLab

- Prepare a Docker host with docker-compose installed

  - I used a dedicated Linux virtual machine (two vCPUs, 6 GB RAM), but this could also be your local workstation
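Before moving on, a quick sanity check on the Docker host can save some debugging. This Python sketch only checks whether `docker` and `docker-compose` are on the `PATH` and reports the first line of their `--version` output; it does not verify that the Docker daemon is actually running.

```python
import shutil
import subprocess
from typing import Dict, Optional, Sequence


def tool_version(cmd: str) -> Optional[str]:
    """Return the first line of `<cmd> --version`, or None if unavailable."""
    if shutil.which(cmd) is None:
        return None  # not on the PATH
    try:
        result = subprocess.run(
            [cmd, "--version"], capture_output=True, text=True, timeout=10
        )
    except (OSError, subprocess.SubprocessError):
        return None  # could not be executed or timed out
    lines = result.stdout.strip().splitlines()
    return lines[0] if result.returncode == 0 and lines else None


def check_prerequisites(
    tools: Sequence[str] = ("docker", "docker-compose"),
) -> Dict[str, Optional[str]]:
    """Map each required tool to its version string (None means missing)."""
    return {tool: tool_version(tool) for tool in tools}


if __name__ == "__main__":
    for tool, version in check_prerequisites().items():
        print(f"{tool:<15} {version or 'MISSING'}")
```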

Setting up the foundation: Installing the continuous integration software

Concourse CI will run the pipeline; at the time of writing, the latest release is v5.8.0. Concourse is fully open source, looks quite nice, and its configuration can be stored in Git. Running it in containers gets you started quickly, and it uses containers as runners, which provides a lot of flexibility (and, in hindsight, caused a lot of headaches).

Basic setup

Log in to your Docker host, download the docker-compose file and open it for editing.

```shell
wget https://concourse-ci.org/docker-compose.yml
vi docker-compose.yml
```

Adjust the configuration file with your credentials as well as the FQDN/IP of your Docker host so that you can access Concourse from your workstation.

```yaml
version: '3'

[...]

  web:
    [...]
    environment:
      CONCOURSE_EXTERNAL_URL: http://<YOUR FQDN or IP>:8080
      [...]
      CONCOURSE_ADD_LOCAL_USER: <USERNAME>:<PASSWORD>
      CONCOURSE_MAIN_TEAM_LOCAL_USER: <USERNAME>
      [...]
```
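Forgetting one of these values is an easy mistake. The following Python sketch is a simple line-based check (no YAML parsing, so it assumes the `KEY: value` mapping style of the compose file) that flags leftover `<...>` placeholders and an external URL pointing at localhost. My assumption is that a localhost URL would break access from another workstation, since Concourse builds links and redirects from that setting.

```python
import re
from typing import List

# Placeholders as written in this post, e.g. <USERNAME> or <YOUR FQDN or IP>.
PLACEHOLDER = re.compile(r"<[^<>]+>")
LOCALHOST_URL = re.compile(r"//(localhost|127\.0\.0\.1)\b")


def compose_problems(compose_text: str) -> List[str]:
    """Return human-readable warnings for suspicious CONCOURSE_* settings."""
    problems = []
    for lineno, line in enumerate(compose_text.splitlines(), start=1):
        stripped = line.strip()
        if not stripped.startswith("CONCOURSE_"):
            continue  # only look at Concourse environment settings
        # Split on the first colon only; values like URLs contain more.
        key, _, value = stripped.partition(":")
        value = value.strip()
        if PLACEHOLDER.search(value):
            problems.append(f"line {lineno}: {key} still contains a placeholder")
        if key == "CONCOURSE_EXTERNAL_URL" and LOCALHOST_URL.search(value):
            problems.append(f"line {lineno}: {key} points to localhost")
    return problems
```

Call it as `compose_problems(open("docker-compose.yml").read())`; an empty list means nothing suspicious was found.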

Once you are done, start Concourse by issuing:

```shell
sudo docker-compose up -d
```

When the process is finished the Web UI should be available at whatever you specified under CONCOURSE_EXTERNAL_URL.
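`docker-compose up -d` returns once the containers are started, which can be before the web node is ready, so scripts that depend on Concourse may want to wait for it. This Python sketch polls the web node; it assumes the info endpoint is `/api/v1/info` and that it answers with JSON containing a `version` field.

```python
import http.client
import json
import time
import urllib.request


def parse_info(body: bytes) -> str:
    """Extract the version string from an info endpoint response body."""
    return json.loads(body)["version"]


def concourse_version(external_url: str, timeout: float = 5.0) -> str:
    """Ask the web node for its version (assumed endpoint: /api/v1/info)."""
    url = external_url.rstrip("/") + "/api/v1/info"
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return parse_info(resp.read())


def wait_for_concourse(external_url: str, attempts: int = 30, delay: float = 2.0) -> str:
    """Poll until the web node answers, then return its version."""
    for _ in range(attempts):
        try:
            return concourse_version(external_url)
        except (OSError, ValueError, http.client.HTTPException):
            # Connection refused, HTTP errors (URLError is an OSError)
            # or a not-yet-valid response: wait and try again.
            time.sleep(delay)
    raise TimeoutError(f"Concourse at {external_url} did not answer after {attempts} attempts")
```

For example, `wait_for_concourse("http://<YOUR FQDN or IP>:8080")` should return the version of the Concourse release you deployed.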

Hint: If you are using a newer version of Ubuntu, be aware of bug 1796119.

Try to log in, and then run the Hello World example to make sure everything is in order.
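For reference, a hello-world pipeline is a single job with a single task that runs `echo` in a small container. The Python sketch below builds such a pipeline, modeled on the example in the Concourse documentation, and prints it as JSON; the job and task names and the `busybox` image are my choices, not requirements.

```python
import json


def hello_world_pipeline(message: str = "hello world") -> dict:
    """One job, one task: echo a message inside a busybox container."""
    return {
        "jobs": [
            {
                "name": "hello-world-job",
                "plan": [
                    {
                        "task": "hello-world-task",
                        "config": {
                            "platform": "linux",
                            # Image the task container runs in, pulled from Docker Hub.
                            "image_resource": {
                                "type": "registry-image",
                                "source": {"repository": "busybox"},
                            },
                            "run": {"path": "echo", "args": [message]},
                        },
                    }
                ],
            }
        ]
    }


if __name__ == "__main__":
    print(json.dumps(hello_world_pipeline(), indent=2))
```

Redirect the output into `hello-world.json` and upload it with `fly`, the Concourse CLI (downloadable from the web UI): `fly -t ci login -c http://<YOUR FQDN or IP>:8080 -u <USERNAME> -p <PASSWORD>`, then `fly -t ci set-pipeline -p hello-world -c hello-world.json` and `fly -t ci unpause-pipeline -p hello-world`. The target name `ci` is arbitrary. I am assuming `fly` accepts the JSON file because JSON is valid YAML; if it complains, write the same structure as YAML instead.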

Summary

I hope that I was able to convey my motivation for going on this journey and to spark your interest in automation.

Next, part 2 will cover setting up the build pipeline for an OS image.
