While bringing myself up to date with NSX-T, I struggled to map its terminology onto my existing NSX-v knowledge. There are quite a few awesome blog posts about either -v or -T; here I try to compare the “edge” in both at a high level.

I hesitated to make a public post out of it, since I am quite new to NSX-T myself and the post may not be 100% correct, but then figured that I can learn from the feedback. So here are my notes, at the risk that someone else has already done the same and that the post is full of errors.

NSX-v

In NSX-v, the term edge usually refers to the Edge Services Gateway (ESG), although, strictly speaking, the control VM of the Distributed Logical Router (DLR) is also an edge (we leave that aside for now).

The edge (ESG) is always deployed as a virtual machine on a vSphere cluster, and its main focus is handling north/south traffic as well as upper-layer services. The ESG therefore provides static or dynamic routing between your virtual and physical network infrastructure and can offer load balancing, VPN, DHCP, …

The NSX Manager takes care of the full lifecycle of the VM. To handle higher throughput or to provide sufficient resources to the network services, the edge comes in different sizing flavours.

An edge cluster in NSX-v is essentially a vSphere cluster that hosts the NSX edge VMs. That cluster must:

- be part of the NSX-managed vCenter

- be connected to the NSX transport zones

- have one or more VLANs assigned to the physical NICs of the ESXi hosts for uplink connectivity to the physical network infrastructure
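If it helps to see these three requirements as a checklist, here is a minimal Python sketch. `VSphereCluster` and `edge_cluster_problems()` are hypothetical names of my own; the sketch does not query vCenter or NSX Manager, it only encodes the requirements listed above against hand-filled data.

```python
from dataclasses import dataclass, field


@dataclass
class VSphereCluster:
    """Hypothetical, simplified view of a vSphere cluster (not a vSphere API object)."""
    name: str
    vcenter: str
    transport_zones: set[str] = field(default_factory=set)
    uplink_vlans: set[int] = field(default_factory=set)


def edge_cluster_problems(cluster: VSphereCluster, nsx_vcenter: str) -> list[str]:
    """Return the NSX-v edge cluster requirements this cluster does not meet."""
    problems = []
    if cluster.vcenter != nsx_vcenter:
        problems.append("not part of the NSX-managed vCenter")
    if not cluster.transport_zones:
        problems.append("not connected to any NSX transport zone")
    if not cluster.uplink_vlans:
        problems.append("no uplink VLAN on the ESXi host NICs")
    return problems


edge = VSphereCluster("edge-01", "vc-01", {"tz-global"}, {100, 101})
print(edge_cluster_problems(edge, "vc-01"))  # []
```

An empty list means the cluster could serve as an NSX-v edge cluster under these (simplified) rules.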

In this cluster, the edge VMs usually peer with a physical router over a dynamic routing protocol. Apart from mechanisms such as active/standby edges or ECMP, the vSphere cluster contributes to the availability of the solution through vSphere HA.

All traffic to or from the physical network infrastructure therefore passes through the edges and the hosts in this cluster.

NSX-T

In NSX-T it is a bit different:

The purpose of NSX Edge is to provide computational power to deliver IP routing and services.

This means the edge itself does not deliver the capabilities of an ESG; rather, it provides the resources to run these functions as part of another instance (which we will discuss later on). So the NSX-T edge is basically like an ESXi hypervisor, just for network functions.

Edges in NSX-T therefore:

- provide compute capacity for instances that host network services and north-south routing

- can be deployed as a VM through a compute manager (vSphere only)

- but edges can also be bare-metal instances (with very strict design constraints)
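To make the two deployment options a bit more tangible, here is a small Python sketch. The `EdgeNode` class, the `FormFactor` enum and `deployment_issues()` are hypothetical names, not part of any NSX-T API; the sketch only encodes the constraint from the list that a compute manager, when one is used, must be vSphere (vCenter), and that bare-metal edges do not go through one.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class FormFactor(Enum):
    VM = "vm"
    BARE_METAL = "bare-metal"


@dataclass
class EdgeNode:
    """Toy model of an NSX-T edge node; names and fields are illustrative only."""
    name: str
    form_factor: FormFactor
    # Set only when a VM edge is deployed through a compute manager (e.g. "vCenter").
    compute_manager: Optional[str] = None


def deployment_issues(edge: EdgeNode) -> list[str]:
    """Check the simplified deployment constraints for an edge node."""
    issues = []
    if edge.compute_manager is not None:
        if edge.form_factor is FormFactor.BARE_METAL:
            issues.append("bare-metal edges are not deployed via a compute manager")
        elif edge.compute_manager != "vCenter":
            issues.append("VM edges can only be deployed to a vSphere compute manager")
    return issues


print(deployment_issues(EdgeNode("edge-01", FormFactor.VM, "vCenter")))  # []
print(deployment_issues(EdgeNode("edge-02", FormFactor.BARE_METAL)))     # []
```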

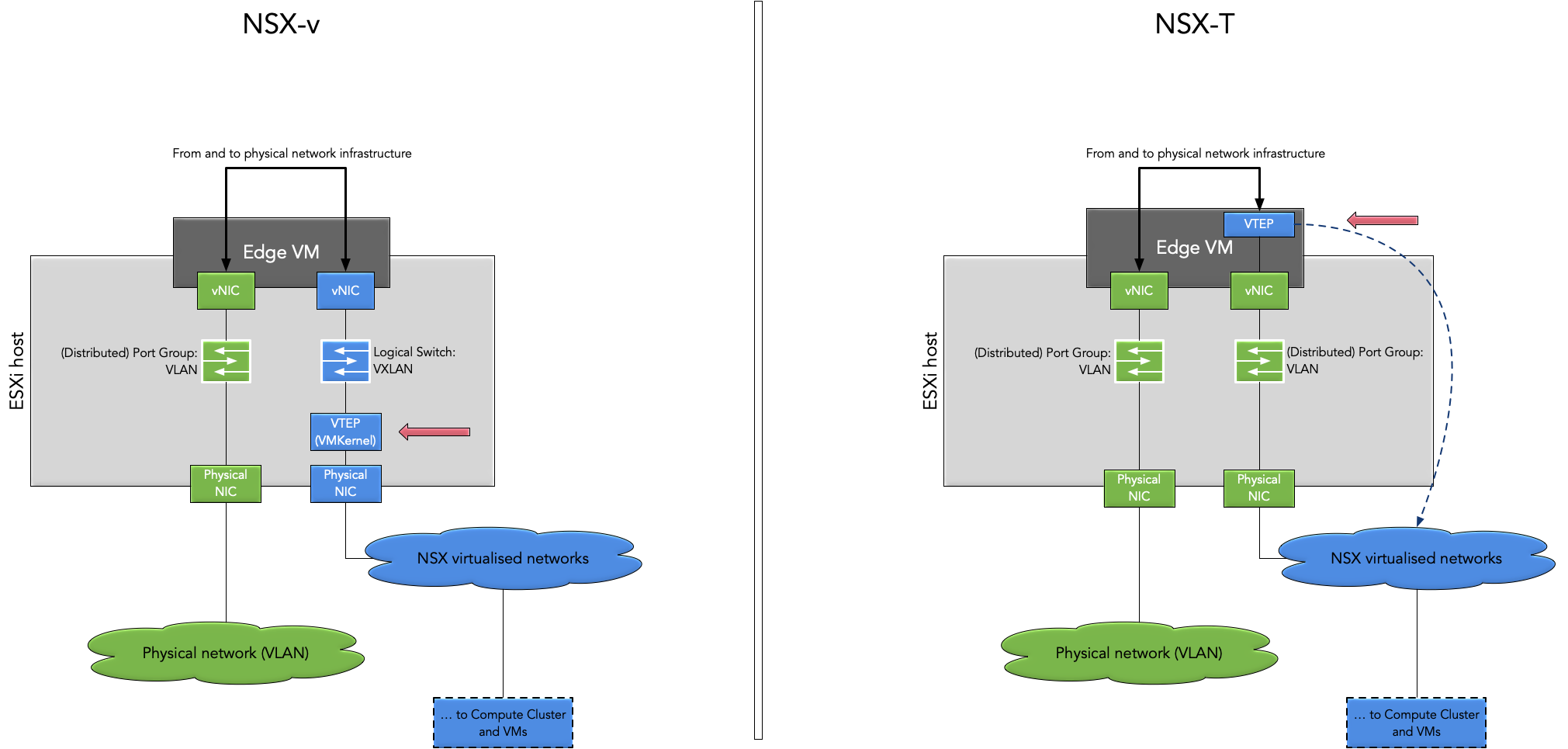

When you think of bare-metal edges the following just makes sense, but it is often overlooked: Edges in NSX-T are transport nodes and therefore part of the transport zone. They do not need to be on an NSX-enabled cluster like in NSX-v.

A (very) simple diagram of the different termination points for the overlay tunnel in NSX-v vs. NSX-T

Another aspect to look at is the availability of the edge, which is provided by aggregating edge nodes into edge clusters. In NSX-T, an edge cluster is therefore no longer a vSphere cluster that hosts the edges, but a group of edge nodes that together meet availability and performance goals. And yes, you can have multiple edge clusters, for various reasons :-)
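The difference between the two meanings of "edge cluster" can be sketched in a few lines of Python. `EdgeCluster` and `place_active_standby()` are hypothetical names, and the placement rule (simply the first two members) is a deliberate simplification; real NSX-T placement of service routers is configurable and more involved. The point is only that membership is logical and independent of where each edge node runs.

```python
from dataclasses import dataclass, field


@dataclass
class EdgeCluster:
    """Toy NSX-T edge cluster: a logical group of edge nodes, not a vSphere cluster.

    Each member maps an edge node name to where it runs (a vSphere cluster for
    VM edges or a physical server for bare-metal edges). Names are hypothetical.
    """
    name: str
    members: dict[str, str] = field(default_factory=dict)

    def place_active_standby(self, sr_name: str) -> tuple[str, str]:
        """Pick two distinct edge nodes for an active/standby service router.

        Simplified placement: the first two members in insertion order.
        """
        nodes = list(self.members)
        if len(nodes) < 2:
            raise ValueError(f"{self.name}: {sr_name} needs two edge nodes for active/standby")
        return nodes[0], nodes[1]


# Two VM edges living on two different vSphere clusters still form one edge cluster.
ec = EdgeCluster("ec-prod", {"edge-01": "vsphere-cl-a", "edge-02": "vsphere-cl-b"})
print(ec.place_active_standby("t0-sr"))  # ('edge-01', 'edge-02')
```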

So what is this instance that the edge node provides compute capacity for? Basically, stateful services running as part of a service router (SR). And what is that? Let’s look at a few terms that come up in the NSX-T world:

- Logical router

- Service router

- Distributed router

- Tier-0 and Tier-1 gateways

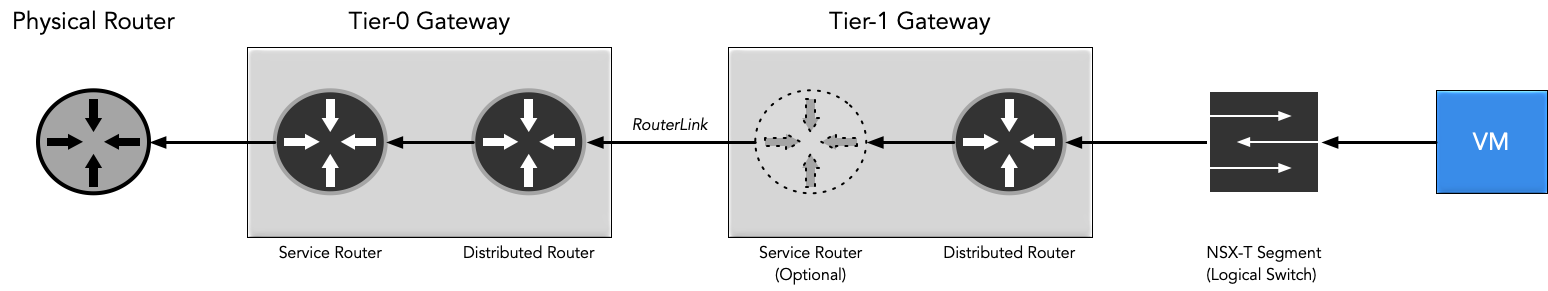

The network path from a VM to the physical network infrastructure (I omitted some elements for clarity)

In NSX-T, a logical router is more of a concept or description than a function or a specific instance (I would argue that the combination of service router and distributed router is what is most often called a logical router). Two aspects of logical routing are independence (decoupling) from the underlying hardware (who would have guessed that from VMware?) and multi-tenant support. Both are implemented by Tier-0 and Tier-1 gateways.

Tier-0 and Tier-1 gateways each consist of a distributed router and a service router (with one exception: a Tier-1 gateway does not always need a service router, as we will see below). The terms Tier-0 and Tier-1 themselves describe, to mention just one aspect, the relative position of the gateway in the infrastructure:

The Tier-0 gateway is closest to the physical infrastructure and is often referred to as the “provider gateway”. As such, it takes care of connectivity towards the physical network, with either static or dynamic routing, and is therefore generally used for north-south traffic.

Implementing a Tier-0 gateway requires an edge cluster with at least one node, because some of its elements (such as physical uplink connectivity) cannot be part of a distributed router and need a service router.

The Tier-1 gateway is the “tenant gateway”. To reach the “outside”, it must be connected to a Tier-0 gateway through a RouterLink, since it cannot connect to the physical network on its own. At the same time, a Tier-1 gateway can only be connected to a single Tier-0 gateway at a time.

A Tier-1 gateway does not need an edge cluster unless we implement stateful services. However, if you select an edge cluster, an SR is created automatically, so think twice before doing this. The Tier-1 gateway mostly handles east-west traffic and is usually the default gateway for the virtual machines in your segments.
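The gateway rules from the last few paragraphs can be summarised in a toy Python model. `Tier0Gateway`, `Tier1Gateway`, `has_sr` and `reaches_outside` are hypothetical names and this is not the NSX-T Policy API; it is a sketch assuming only the rules stated here: a Tier-0 needs at least one edge node, a Tier-1 links to at most one Tier-0, and selecting an edge cluster for a Tier-1 gives it an SR.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Tier0Gateway:
    """Toy model of the 'provider' gateway facing the physical network."""
    name: str
    edge_nodes: list[str]  # nodes of the edge cluster hosting its SR

    def __post_init__(self) -> None:
        # Uplinks to the physical network live in an SR, so an edge node is mandatory.
        if not self.edge_nodes:
            raise ValueError(f"{self.name}: Tier-0 needs an edge cluster with at least one node")


@dataclass
class Tier1Gateway:
    """Toy model of the 'tenant' gateway, usually the default gateway of the segments."""
    name: str
    tier0: Optional[Tier0Gateway] = None    # a single field: at most one Tier-0 at a time
    edge_nodes: Optional[list[str]] = None  # only needed for stateful services

    @property
    def has_sr(self) -> bool:
        # Mirrors the text: selecting an edge cluster creates an SR automatically.
        return bool(self.edge_nodes)

    @property
    def reaches_outside(self) -> bool:
        # North-south traffic always goes through the linked Tier-0 gateway.
        return self.tier0 is not None


t0 = Tier0Gateway("t0-provider", ["edge-01", "edge-02"])
t1_web = Tier1Gateway("t1-web", tier0=t0)                        # DR only
t1_lb = Tier1Gateway("t1-lb", tier0=t0, edge_nodes=["edge-01"])  # DR + SR
print(t1_web.has_sr, t1_lb.has_sr, t1_web.reaches_outside)  # False True True
```

Modelling the Tier-0 link as a single optional field, rather than a list, is what encodes the "one Tier-0 at a time" rule.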

Now that we know about gateways, what kinds of routers are there?

A distributed router is what you would call a Distributed Logical Router (DLR) in NSX-v: a router that sits in every transport node (most of the time an ESXi host) and performs routing at the source hypervisor. This covers all your traffic within the NSX world; the traffic flow is not visible from the outside, and it uses the underlay as transport between the nodes.
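A rough Python sketch of what "routing at the source hypervisor" means for an east-west packet between two segments behind the same distributed router. The segment names, addresses and host names (`esxi-01`, `esxi-02`) are made-up lab values, and `forward()` is a hypothetical helper that only lists the logical hops; it does not model ARP, encapsulation headers or the real NSX-T data path.

```python
import ipaddress

# Hypothetical lab: two segments attached to the same distributed router.
SEGMENTS = {
    "web": ipaddress.ip_network("10.0.1.0/24"),
    "app": ipaddress.ip_network("10.0.2.0/24"),
}
# Where each VM runs (VM IP -> transport node); assumed to be known to every node.
VM_LOCATION = {
    ipaddress.ip_address("10.0.1.10"): "esxi-01",
    ipaddress.ip_address("10.0.2.20"): "esxi-02",
}


def forward(src: str, dst: str) -> list[str]:
    """Return the logical hops for an east-west packet routed by the DR."""
    src_ip, dst_ip = ipaddress.ip_address(src), ipaddress.ip_address(dst)
    source_node = VM_LOCATION[src_ip]
    dst_segment = next((name for name, net in SEGMENTS.items() if dst_ip in net), None)
    if dst_segment is None:
        # Destinations outside NSX would go north via the Tier-0; out of scope here.
        raise ValueError(f"{dst} is not on a local segment")
    # The route lookup happens in the DR instance on the source transport node.
    hops = [f"DR on {source_node}: route to segment '{dst_segment}'"]
    dest_node = VM_LOCATION[dst_ip]
    if dest_node != source_node:
        # Encapsulated in the overlay and carried across the underlay.
        hops.append(f"overlay tunnel {source_node} -> {dest_node}")
    hops.append(f"deliver to {dst} on {dest_node}")
    return hops


for hop in forward("10.0.1.10", "10.0.2.20"):
    print(hop)
```

No edge node appears in the hop list: the routing decision is made on `esxi-01` itself, which is the key difference from hair-pinning east-west traffic through a central router.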

The service router handles all the stateful services that cannot be distributed:

- Load Balancer

- VPN

- DHCP

- DNS Forwarder

- On Tier-0: Physical uplink connectivity

- …
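As a rough rule of thumb, the list above can be turned into a check for whether a gateway needs an SR on an edge node. The service identifiers and `needs_service_router()` are hypothetical; the set is deliberately incomplete (the list ends with "…"), and the sketch ignores that Tier-0 and Tier-1 gateways do not support exactly the same stateful features.

```python
# Hypothetical service identifiers, following the list in the post (incomplete on purpose).
SR_SERVICES = {"load_balancer", "vpn", "dhcp", "dns_forwarder"}
TIER0_ONLY_SR = {"physical_uplink"}


def needs_service_router(tier: int, services: set[str]) -> bool:
    """True if any configured service has to run in an SR on an edge node."""
    sr_required = set(SR_SERVICES)
    if tier == 0:
        sr_required |= TIER0_ONLY_SR
    return bool(services & sr_required)


print(needs_service_router(1, {"east_west_routing"}))          # False: the DR is enough
print(needs_service_router(1, {"east_west_routing", "dhcp"}))  # True
print(needs_service_router(0, {"physical_uplink"}))            # True
```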

As mentioned above, these functions are instantiated on the edge. If you have a lab (read: non-production environment), you can actually log into your edge and run “docker ps” to see them. Another often underestimated fact is that both Tier-0 and Tier-1 gateways can run a certain set of stateful services; not the same features on each, but still a big thing if you are coming from NSX-v.

You could probably fill a book with Tier-0 gateway designs: with or without ECMP, different availability options, and so on. Here are a few blog posts I can recommend for further reading:

- Fantastic stuff by Harikrishnan T (make sure to read his other articles as well): https://vxplanet.com/2019/07/27/nsx-t-tier0-ecmp-routing-explained/

- Rutger Blom with a lab implementation: https://rutgerblom.com/2019/04/07/nsx-t-lab-part-5/

- Mike Da Costa has some nice posts around troubleshooting: https://vswitchzero.com/2019/02/27/nsx-t-troubleshooting-scenario-1/

- Ronald de Jong has more on Routing and NSX-T: https://my-sddc.net/2019/04/08/routing-with-nsx-t-part-1/

Closing words

The big thing is to get your head around these new concepts: read blog posts, read the documentation, try it out. Once that is done, you start to see the improvements for architecting an environment. You can now draw a clear line between the infrastructure design (e.g. for management and edge) and the actual network design.
